How to Upgrade an RKE2 Cluster

gracefully, properly, and easily

Lately, I have been doing a ton of work with Rancher and RKE2. What’s the difference, you might ask? It’s a good question.

According to the official docs:

Rancher is a Kubernetes management tool to deploy and run clusters anywhere and on any provider.

Rancher can provision Kubernetes from a hosted provider, provision compute nodes and then install Kubernetes onto them, or import existing Kubernetes clusters running anywhere.

~ https://ranchermanager.docs.rancher.com

So Rancher is the provisioner/manager/frontend for Kubernetes.

What is RKE2?

RKE2, also known as RKE Government, is Rancher's next-generation Kubernetes distribution.

~ https://docs.rke2.io

Great. RKE2 is the Kubernetes distro/engine.

In this stack (I am going to start calling my posts “stacks” since that’s the more accurate term), we are going to upgrade an RKE2 cluster with the help of Rancher.

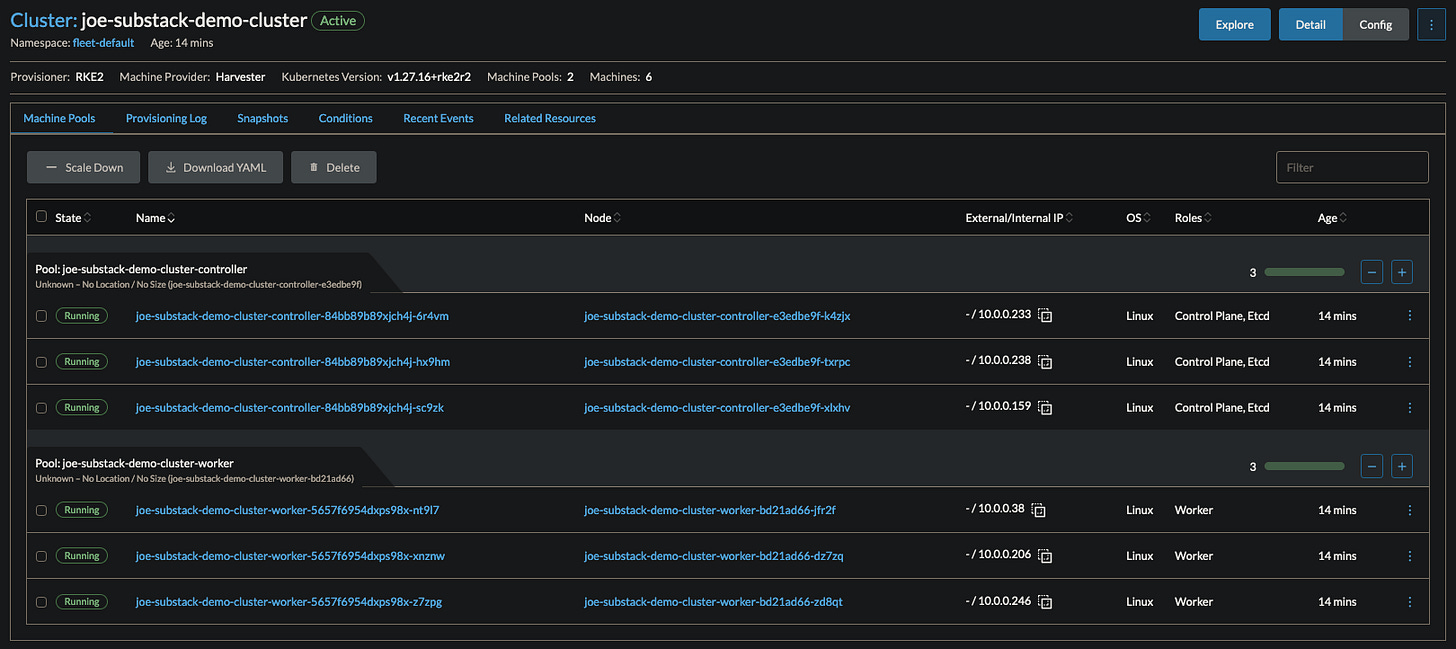

It just so happens that I have a cluster already spun up. It is running RKE2 version v1.27.16+rke2r2, and we want to go to v1.31.1+rke2r1.
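An RKE2 version string combines two pieces: the upstream Kubernetes version and an RKE2 release suffix (`+rke2rN`). The sketch below assumes the `v<major>.<minor>.<patch>+rke2r<N>` shape. It splits the two versions from this stack into their parts and counts how many Kubernetes minor releases the jump covers. `parse_rke2_version` is a hypothetical helper written for illustration.

```python
import re
from typing import NamedTuple

# Assumed format: v<major>.<minor>.<patch>+rke2r<revision>, e.g. "v1.27.16+rke2r2"
_RKE2_VERSION = re.compile(r"^v(\d+)\.(\d+)\.(\d+)\+rke2r(\d+)$")


class Rke2Version(NamedTuple):
    major: int
    minor: int
    patch: int
    revision: int  # the N in "+rke2rN"


def parse_rke2_version(text: str) -> Rke2Version:
    """Split an RKE2 version string into its numeric parts (hypothetical helper)."""
    match = _RKE2_VERSION.match(text.strip())
    if match is None:
        raise ValueError(f"not an RKE2 version string: {text!r}")
    return Rke2Version(*(int(part) for part in match.groups()))


current = parse_rke2_version("v1.27.16+rke2r2")
target = parse_rke2_version("v1.31.1+rke2r1")

# NamedTuples compare field by field: major, then minor, then patch, then revision.
assert target > current
# Only meaningful when the major versions match (both are 1 here).
minor_gap = target.minor - current.minor
print(f"{current} -> {target}: {minor_gap} minor releases")
```

For the versions in this stack, the gap is four minor releases (1.27 to 1.31).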

The cluster has 3 controllers and 3 workers. It was created using the built-in Harvester driver. Rancher treats Harvester as a cloud provider and creates clusters on top of it.

It turns out that how you spin up the cluster is the most important part of this whole equation. If you SSHed into the machine and installed RKE2 using the binary as described here, your upgrade process will be slightly different from the process I am going to show.

In my experience, most engineers like to use Rancher to directly create/manage/destroy clusters. You can use the various drivers that are available in Rancher (AWS, DigitalOcean, Linode, OCI, etc.).

At the end of the day, Rancher runs the RKE2 binary on your behalf and passes it configuration based on what you define.

For example, as I mentioned earlier, I am using the Harvester driver. I configured some creds on the Rancher install, added the cluster-registration-url to my Harvester install, and boom: my Rancher can talk to my Harvester. Sorry if that’s too simplistic a view, but it is out of scope for this stack.

So we have an RKE2 cluster that was provisioned by Rancher on Harvester. How do we upgrade?

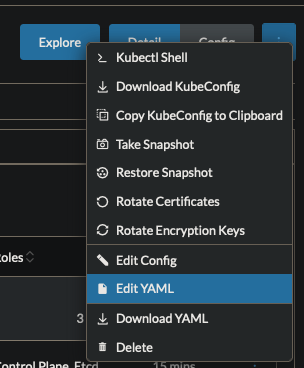

Notice the version is v1.27.16+rke2r2. If we click the three dots in the top right and choose “Edit YAML”, we get the following:

Most of this isn’t important here. What matters is that the RKE2 version is defined right in this YAML! Let’s overwrite it with v1.31.1+rke2r1.

Then click “Save”.
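If you would rather script this edit than click through the UI, here is a minimal sketch of the same change. It assumes the object is Rancher's `provisioning.cattle.io/v1` `Cluster` and that the RKE2 version lives at `spec.kubernetesVersion`. Check both against the YAML your own Rancher shows before relying on it. The sketch operates on a plain dict, such as one loaded from the YAML with a library of your choice. `set_kubernetes_version` and the cluster name `my-cluster` are hypothetical.

```python
import copy
from typing import Any, Dict


def set_kubernetes_version(cluster: Dict[str, Any], new_version: str) -> Dict[str, Any]:
    """Return a copy of the cluster manifest with spec.kubernetesVersion replaced.

    Assumes a Rancher provisioning.cattle.io/v1 Cluster shape; hypothetical helper.
    """
    if cluster.get("apiVersion") != "provisioning.cattle.io/v1" or cluster.get("kind") != "Cluster":
        raise ValueError("expected a provisioning.cattle.io/v1 Cluster object")
    updated = copy.deepcopy(cluster)  # leave the caller's object untouched
    spec = updated.setdefault("spec", {})
    if spec.get("kubernetesVersion") == new_version:
        raise ValueError(f"cluster is already on {new_version}")
    spec["kubernetesVersion"] = new_version
    return updated


# Trimmed, illustrative manifest with only the fields this sketch needs.
# "my-cluster" is a made-up name; "fleet-default" is assumed as the namespace.
cluster = {
    "apiVersion": "provisioning.cattle.io/v1",
    "kind": "Cluster",
    "metadata": {"name": "my-cluster", "namespace": "fleet-default"},
    "spec": {"kubernetesVersion": "v1.27.16+rke2r2"},
}

upgraded = set_kubernetes_version(cluster, "v1.31.1+rke2r1")
print(upgraded["spec"]["kubernetesVersion"])
```

The function returns a modified copy instead of mutating its input. That way, the original manifest stays available for a diff before anything is applied.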

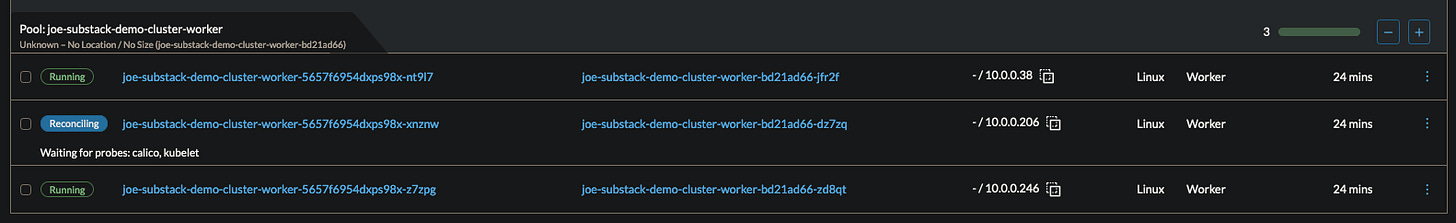

The cluster begins upgrading.

With 3 controllers, each one is upgraded and put back into rotation one at a time.

The same goes for the worker nodes.
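The ordering above can be modeled as a list of batches: controllers first, one node at a time, then workers. This is a simplified sketch that assumes one node per batch for each role by default. It does not reproduce how Rancher actually schedules the upgrade. The node names and the `plan_upgrade_batches` helper are hypothetical.

```python
from typing import List


def plan_upgrade_batches(
    controllers: List[str],
    workers: List[str],
    controller_concurrency: int = 1,
    worker_concurrency: int = 1,
) -> List[List[str]]:
    """Group nodes into upgrade batches: every controller batch first, then workers.

    Simplified model of a rolling upgrade; hypothetical helper.
    """
    if controller_concurrency < 1 or worker_concurrency < 1:
        raise ValueError("concurrency must be at least 1")

    def chunk(nodes: List[str], size: int) -> List[List[str]]:
        # Split the node list into consecutive slices of at most `size` nodes.
        return [nodes[i:i + size] for i in range(0, len(nodes), size)]

    return chunk(controllers, controller_concurrency) + chunk(workers, worker_concurrency)


# Hypothetical node names for a 3 controller / 3 worker cluster like the one in this stack.
controllers = ["cp-1", "cp-2", "cp-3"]
workers = ["worker-1", "worker-2", "worker-3"]

for step, batch in enumerate(plan_upgrade_batches(controllers, workers), start=1):
    print(f"step {step}: upgrade {', '.join(batch)}")
```

Raising `worker_concurrency` shortens the worker phase. The trade-off is that more of the cluster's capacity is out of rotation at once.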

A recommended setting, at cluster creation or before an upgrade, is to have nodes drained before they are upgraded. This helps ensure that workloads see no downtime (there *should be* no downtime).
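In a Rancher-provisioned RKE2 cluster, draining is configured in the cluster's upgrade strategy. The sketch below assumes the field names that appear in recent Rancher cluster YAML: `spec.rkeConfig.upgradeStrategy.controlPlaneDrainOptions` and `workerDrainOptions`, each with an `enabled` flag. Verify these names against your own cluster's YAML before relying on them. `enable_drain_on_upgrade` is a hypothetical helper that works on the dict form of the manifest.

```python
import copy
from typing import Any, Dict

# Assumed keys for the drain settings in a Rancher provisioning.cattle.io/v1 Cluster.
DRAIN_KEYS = ("controlPlaneDrainOptions", "workerDrainOptions")


def enable_drain_on_upgrade(cluster: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the manifest with draining turned on for both node roles.

    Other drain options already present (timeouts, grace periods, ...) are kept.
    Hypothetical helper; field names are assumptions to check against your YAML.
    """
    updated = copy.deepcopy(cluster)
    strategy = (
        updated.setdefault("spec", {})
        .setdefault("rkeConfig", {})
        .setdefault("upgradeStrategy", {})
    )
    for key in DRAIN_KEYS:
        strategy.setdefault(key, {})["enabled"] = True
    return updated


# Trimmed, illustrative manifest with draining disabled for the control plane.
cluster = {
    "spec": {
        "rkeConfig": {
            "upgradeStrategy": {
                "controlPlaneConcurrency": "1",
                "controlPlaneDrainOptions": {"enabled": False, "timeout": 120},
                "workerConcurrency": "1",
            }
        }
    }
}

strategy = enable_drain_on_upgrade(cluster)["spec"]["rkeConfig"]["upgradeStrategy"]
print(strategy["controlPlaneDrainOptions"], strategy["workerDrainOptions"])
```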

Let’s check in on the logs:

One of the controllers has been upgraded and the second is in progress. In this scenario, Rancher only waited for two controllers to finish before moving on to upgrading the workers.

The upgrade is relatively simple. The new binary is downloaded and installed. RKE2 is restarted with the new binary and the various services come up and check in.
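For comparison, consider a node where RKE2 was installed by hand, the case where the process differs from this one. There, the download, install, and restart cycle is usually done in two steps. First, run the install script from get.rke2.io, which honors the `INSTALL_RKE2_VERSION` and `INSTALL_RKE2_TYPE` environment variables. Then restart the `rke2-server` (controllers) or `rke2-agent` (workers) service. The sketch below only builds those shell commands, assuming a systemd-based node and root access. It does not run them, and it is not what Rancher executes internally. `manual_upgrade_commands` is a hypothetical name.

```python
import shlex
from typing import List

INSTALL_SCRIPT_URL = "https://get.rke2.io"


def manual_upgrade_commands(version: str, role: str) -> List[str]:
    """Build (but do not run) the shell commands for a manual RKE2 node upgrade.

    role is "server" for controllers or "agent" for workers. Hypothetical helper;
    assumes the get.rke2.io install script and systemd service names.
    """
    if role not in ("server", "agent"):
        raise ValueError("role must be 'server' or 'agent'")
    env = f"INSTALL_RKE2_VERSION={shlex.quote(version)} INSTALL_RKE2_TYPE={role}"
    return [
        # Download and install the requested RKE2 release over the existing one.
        f"curl -sfL {INSTALL_SCRIPT_URL} | {env} sh -",
        # Restart the service so the node comes back up on the new binary.
        f"systemctl restart rke2-{role}",
    ]


for command in manual_upgrade_commands("v1.31.1+rke2r1", "server"):
    print(command)
```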

Next, the workers begin to upgrade.

And boom. We are done. Here are the full logs:

The entire upgrade took 10 minutes. Not too bad. If these nodes had been drained before upgrading, it would have taken longer.

Let’s run some commands to see more info:

Great. The version shows that the upgrade was successful.
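To check every node without eyeballing the output, one option is to read `status.nodeInfo.kubeletVersion` from `kubectl get nodes -o json`. On RKE2 nodes, that field normally carries the full `+rke2rN` version. This sketch assumes `kubectl` is on your PATH and pointed at the upgraded cluster. `fetch_nodes_json`, `kubelet_versions`, and `nodes_not_on` are hypothetical helper names.

```python
import json
import subprocess
from typing import Dict


def fetch_nodes_json() -> str:
    """Return the raw JSON from `kubectl get nodes -o json` (needs kubectl and a kubeconfig)."""
    result = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


def kubelet_versions(nodes_json: str) -> Dict[str, str]:
    """Map each node name to the kubelet version it reports."""
    nodes = json.loads(nodes_json)["items"]
    return {
        node["metadata"]["name"]: node["status"]["nodeInfo"]["kubeletVersion"]
        for node in nodes
    }


def nodes_not_on(nodes_json: str, target: str) -> Dict[str, str]:
    """Return only the nodes whose kubelet version differs from `target`."""
    return {name: v for name, v in kubelet_versions(nodes_json).items() if v != target}
```

Usage: `print(nodes_not_on(fetch_nodes_json(), "v1.31.1+rke2r1"))`. An empty dict means every node reports the target version.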

This method may not work for every cluster, but it will work for clusters that are managed by Rancher.

Have fun upgrading to latest (errr stable).

Cheers,

Joe