Another day, another reason to switch exclusively to Fleet for my GitOps needs. This time I am looking at how I can deploy Harvester resources (OS images, cloud-init files, storage classes, etc.) using Fleet instead of Argo CD.

In my recent article about Fleet, I specifically focused on using Fleet in an RKE2 scenario. I created a cluster and deployed resources to that cluster using Fleet.

In this article, I want to manage my Harvester environment with Fleet. Harvester is really just an RKE2 cluster with some magic manifests installed on top of it.

First things first: how have I set up my environment, and how can you replicate it?

I am using an “Experimental” addon to Harvester in my cluster called “Rancher Manager”, also known as Rancher VCluster. Essentially, this addon “runs a nested K3s cluster in the rancher-vcluster namespace and deploys Rancher to this cluster”, which eliminates the need to spin up Rancher on a Harvester VM.

This addon is awesome and I highly recommend it. I configure Harvester to talk to this Rancher environment, and now I can install RKE2 clusters to Harvester using the Harvester Cloud Provider.

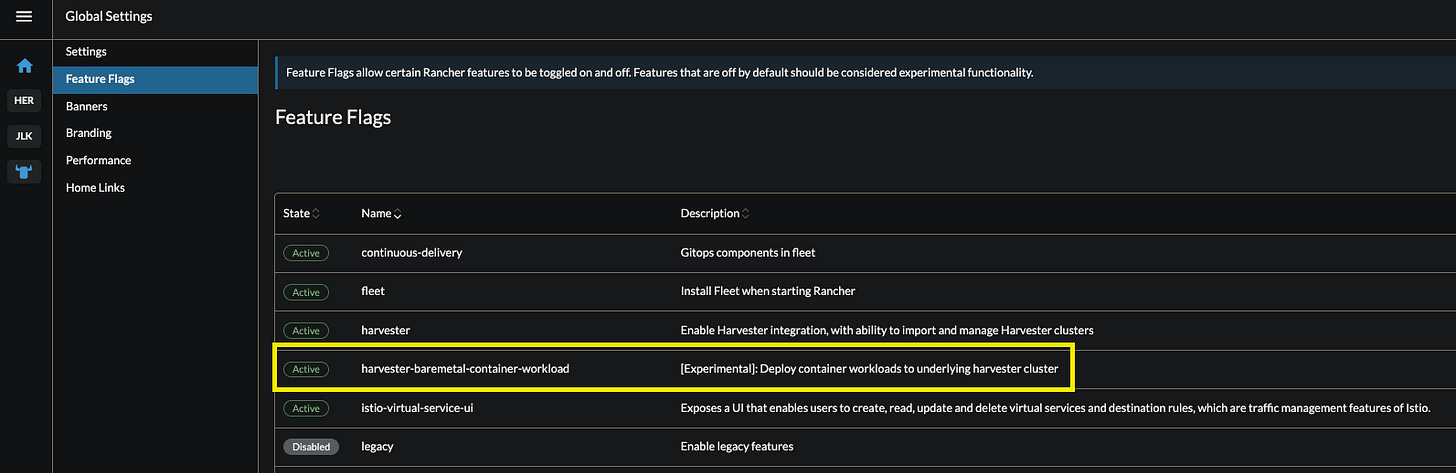

Next, in Rancher, we navigate to “Global Settings → Feature Flags” and enable the “harvester-baremetal-container-workload” flag, which allows us to “deploy container workloads to underlying harvester cluster”.

This flag allows us to schedule DIRECTLY to the root-level RKE2 cluster that Harvester is running on.

In the past, I have installed Argo CD on the root-level cluster and used that for applying various settings and manifests. After all, everything in Harvester is essentially a Kubernetes component.
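If you would rather not click through the UI, Rancher stores feature flags as `Feature` objects, so the same toggle can probably be expressed as a manifest. This is a minimal sketch and not something I have verified on every Rancher version; it assumes you apply it against the cluster where Rancher itself runs (the nested vcluster in my setup), not against Harvester directly.

```yaml
# Sketch: enable the feature flag declaratively.
# Assumption: Rancher exposes flags as cluster-scoped
# management.cattle.io/v3 Feature objects named after the flag.
apiVersion: management.cattle.io/v3
kind: Feature
metadata:
  name: harvester-baremetal-container-workload
spec:
  value: true
```

Rancher may restart after a flag changes, so give it a minute before moving on.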

Let’s now head over to the “Continuous Delivery” tab in Rancher, which is Fleet. For a tutorial and explainer on Fleet, make sure to read my recent article.

I have a public repo here that has some interesting manifests:

images.yml - deploys two OS images to Harvester

recurring-snap-cleanup-per-hour.yml - deploys a Longhorn job that cleans up system generated snapshots every hour

recurring-snap-delete-per-hour.yml - deploys a Longhorn job that cleans up user created snapshots every hour

ssd-premium-storage-class.yaml - an SSD storage class that has 3 replicas

ssd-storage-class.yaml - an SSD storage class that has 1 replica

ubuntu-cloudinit.yml - a basic Ubuntu cloud-init file to be used with Harvester VMs
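To give a feel for what lives in files like these, here is a rough sketch of a three-replica SSD storage class and an hourly Longhorn snapshot cleanup job. These are illustrative and not copied from the repo: the object names, the `ssd` disk tag, and the `default` job group are assumptions, so adjust them to match your own Longhorn setup.

```yaml
# Sketch of a Longhorn-backed storage class with 3 replicas.
# Assumption: the Longhorn disks are tagged "ssd".
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ssd-premium            # hypothetical name
provisioner: driver.longhorn.io
parameters:
  numberOfReplicas: "3"
  diskSelector: "ssd"
  staleReplicaTimeout: "30"
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
---
# Sketch of an hourly snapshot cleanup job.
# Assumption: volumes belong to the "default" recurring job group.
apiVersion: longhorn.io/v1beta2
kind: RecurringJob
metadata:
  name: snap-cleanup-per-hour  # hypothetical name
  namespace: longhorn-system
spec:
  task: snapshot-cleanup
  cron: "0 * * * *"            # at minute 0 of every hour
  groups:
    - default
  retain: 0                    # not used by the cleanup task
  concurrency: 1
```

The one-replica variant would be the same storage class with `numberOfReplicas: "1"` and a different name.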

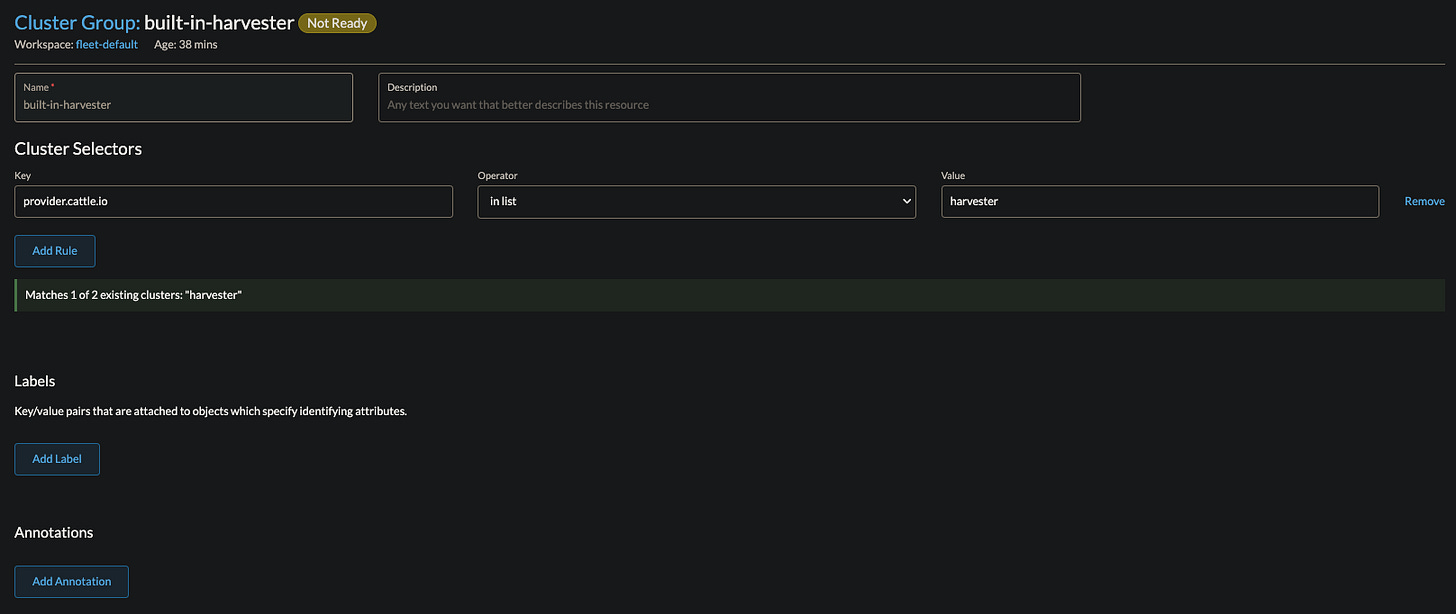

In order to apply these manifests, we need to first make a cluster group.

I am naming the cluster group “built-in-harvester” and adding the cluster selector “provider.cattle.io: harvester”. The Harvester cluster is the only cluster with that label.
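The UI is just creating a Fleet `ClusterGroup` behind the scenes. A roughly equivalent manifest would look something like this; the `fleet-default` namespace is an assumption on my part, so use whichever Fleet workspace your Harvester cluster actually lives in.

```yaml
# Sketch: cluster group that selects the Harvester cluster by label.
apiVersion: fleet.cattle.io/v1alpha1
kind: ClusterGroup
metadata:
  name: built-in-harvester
  namespace: fleet-default   # assumption: workspace containing the Harvester cluster
spec:
  selector:
    matchLabels:
      provider.cattle.io: harvester
```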

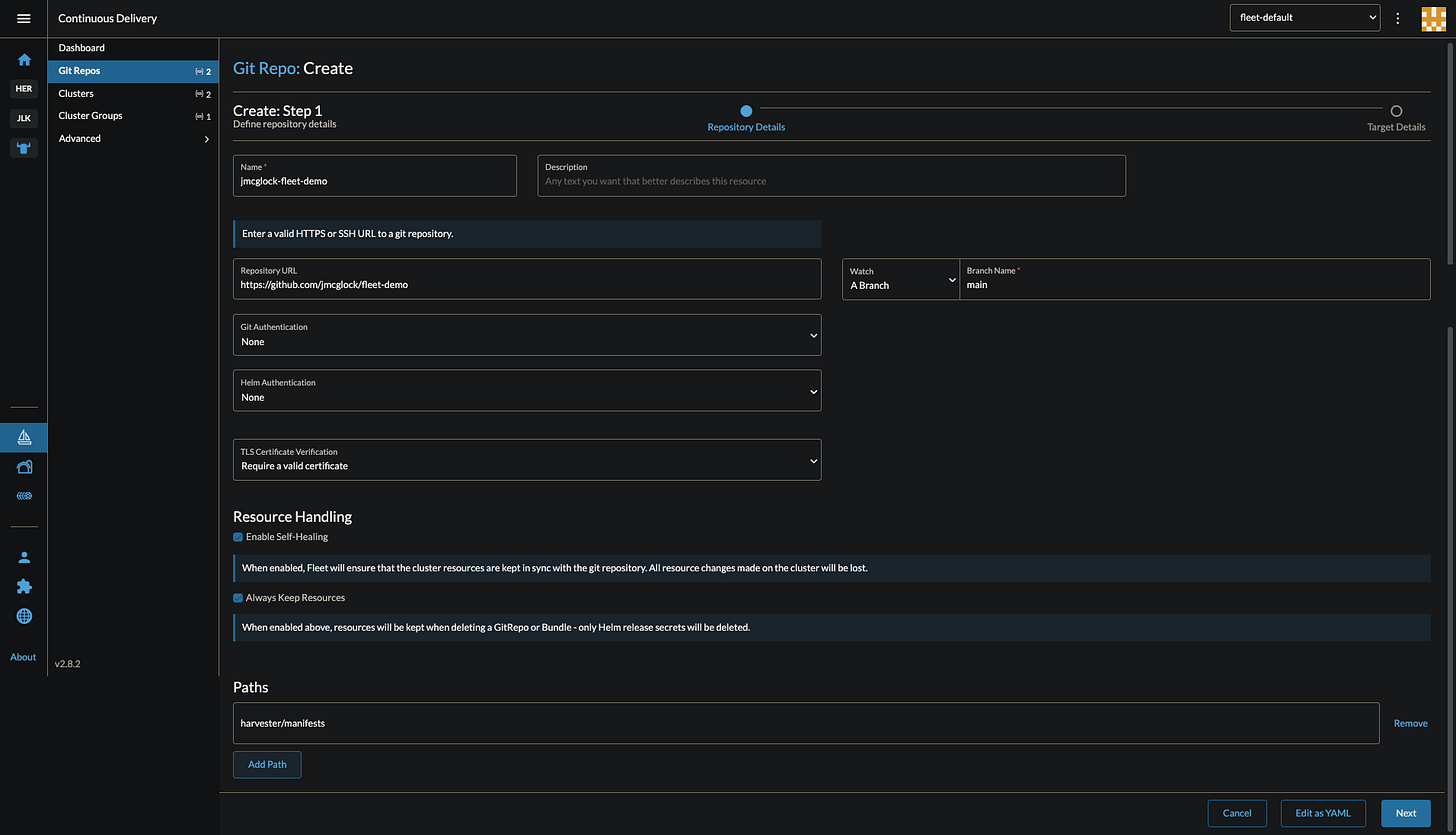

Now we can create the Git repo in Fleet and target the cluster group.

If you are using my fleet-demo repo, you will want to set it up like this:

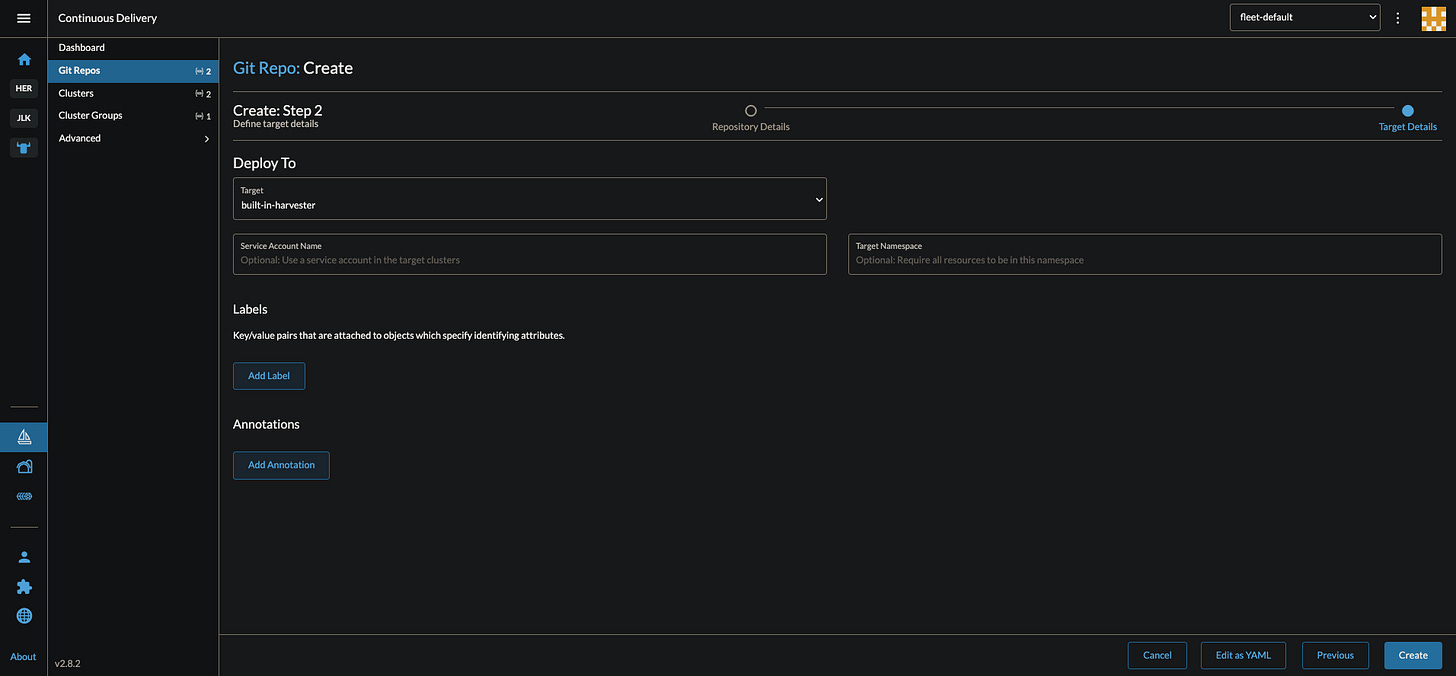

On the next page, we will target the cluster group:

And there we go! The resources will begin applying to the underlying Harvester cluster.
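If you prefer to keep even the Fleet configuration in Git, the same thing can be sketched as a `GitRepo` resource. The repo URL, branch, and path below are placeholders, not the real values from my repo, and the namespace is again assumed to be the workspace that holds the cluster group.

```yaml
# Sketch: GitRepo that targets the cluster group created earlier.
apiVersion: fleet.cattle.io/v1alpha1
kind: GitRepo
metadata:
  name: fleet-demo
  namespace: fleet-default                      # assumption: same workspace as the cluster group
spec:
  repo: https://example.com/your-user/fleet-demo  # placeholder URL
  branch: main                                  # assumption
  paths:
    - harvester                                 # hypothetical directory holding the manifests
  targets:
    - name: harvester
      clusterGroup: built-in-harvester
```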

In my case, the OS images were already present in the cluster, so they errored out with: “VirtualMachineImage "jammy-default" in namespace "default" exists and cannot be imported into the current release.” All good! Everything else applied without issue.
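That message comes from Helm, which Fleet uses under the hood to apply bundles: Helm refuses to take over an object that does not already carry its ownership metadata. One way out is to delete the existing image and let Fleet recreate it. Another, sketched below, is to add the ownership label and annotations to the existing object so Helm will adopt it. I have not filled in the release name and namespace because they depend on your bundle; treat both as placeholders to look up in your own environment.

```yaml
# Sketch: metadata Helm expects before it will adopt an existing object.
# <release-name> and <release-namespace> are placeholders, not real values.
metadata:
  name: jammy-default
  namespace: default
  labels:
    app.kubernetes.io/managed-by: Helm
  annotations:
    meta.helm.sh/release-name: <release-name>
    meta.helm.sh/release-namespace: <release-namespace>
```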

I continue to march towards the fully automated GitOps home lab!

Cheers,

Joe